Geographical Erasure in Language Generation

Last year was a big year for learning about large language models (LLMs), and about the ethical issues they raise. In our EMNLP 2023 Findings paper Geographical Erasure in Language Generation, AWS colleagues and I study whether LLMs are geographically inclusive. They’re not: many countries are mentioned far less often than their English-speaking populations would imply.

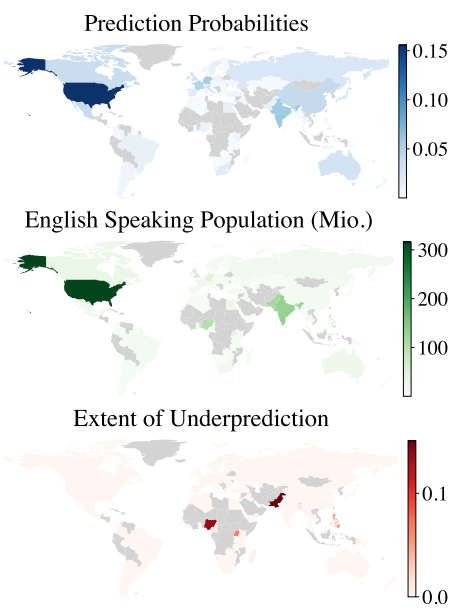

Some countries are vastly underpredicted compared to their English speaking populations. Top: Country probabilities assigned by GPT-NeoX when prompted with “I live in”. Middle: English speaking populations per country. Bottom: Countries experiencing erasure, i.e. underprediction compared to their population by at least a factor 3 (see §3 of the paper). Data is missing for grey countries (see §6 of the paper).
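To make the "factor 3" criterion in the caption concrete, here is a minimal Python sketch. It is not the code from the paper, and §3 of the paper has the precise definition. The function name `erased_countries`, the placeholder countries, and all numbers are made up for illustration. The sketch assumes both inputs are already normalised over the same set of countries.

```python
def erased_countries(model_probs, population_shares, factor=3.0):
    """Return countries whose population share exceeds the model's
    probability by at least `factor` (illustrative helper, not the paper's code)."""
    erased = []
    for country, true_share in population_shares.items():
        predicted = model_probs.get(country, 0.0)
        # A country with a positive share that the model never predicts
        # counts as erased; otherwise compare the ratio to the threshold.
        if true_share > 0 and (predicted == 0.0 or true_share / predicted >= factor):
            erased.append(country)
    return sorted(erased)


# Made-up numbers for three placeholder countries.
model_probs = {"A": 0.60, "B": 0.30, "C": 0.10}        # stand-in for p(country | "I live in")
population_shares = {"A": 0.40, "B": 0.25, "C": 0.35}  # stand-in for English speaking population shares

print(erased_countries(model_probs, population_shares))
```

With these toy values, only country C is underpredicted by more than a factor of 3 (0.35 versus 0.10), so it is the only one flagged.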

Resources:

- The paper.

- The code is open source; find it here.

- A talk about the work at Merantix Momentum in Berlin (sorry, not the best audio!):